Artificial general intelligence (AGI) is the hypothetical super AI of the (maybe) near future. Its advent would be so strange and transformative that only the more out-there worldviews have a take on it.

Here are three gaining prominence in certain circles of the Internet.

TESCREAL. A heinous acronym I won’t spell out, it’s basically your rationalist, longtermist, effective altruist types who see AGI as an existential threat. Hell’s-easier Yudkowsky is something of a figurehead. You might have read or seen his warnings. At the root of rationalist politics is a commitment to feeling good. For many rationalists (not all, but I’m rounding off here for this single paragraph explaining their worldview) their utilitarian outlook means the highest and perhaps only good is positive subjective experience. So the goal is more sentient beings (extend human civilisation so more people end up being born) experiencing greater highs (find ways to hack our nervous systems’ rewards) for longer (strive for biological immortality).1 This makes them wary of things that could scupper this plan for extending bliss throughout the galaxy, i.e. existential risks like pandemics, nuclear war, and AGI.

e/acc. Pronounced ee-ack. Stands for effective accelerationism. It’s in contrast to effective altruism (q.v. TESCREAL above) which may want to slow things down and take precautions to avoid armageddon. The e/acc crowd, fuelled by Diet Coke and the natural high you get from weekly advances in AI and the occasional room-temperature superconductor, want to speed things up:

“Bring on AGI because it will hasten the universal process that we’re all a part of: the consumption of free energy from the stars and the inexorable rise of entropy. No immortality for us. The greatest good is to be a part of the cosmic churn, the exploration of the landscape of possible forms, and the evolutionary forces that create complexity even amid a global decline from the universe’s low entropy beginning. Is AGI potentially dangerous? Certainly. But only technological advancement that is more rapid still can equip us to deal with new problems: excelsior!”

This is not an actual quote but gives the gist.

Postrats. As in post-rationalists. Many of them were once in the orbit of the rationalism described above but found the worldview to be too hardcore, too cerebral, and (ironically for an ideology dedicated to bliss) lacking any nourishment for the spirit. Now they generally advocate a vague spiritualism to temper a broadly rational worldview. Meditation, being in nature, larping at traditional religion, psychedelics, and just a frisson of occultism are all hallmarks of postrats in “TPOT” (this part of Twitter). David Chapman is a well-known thinker associated with the label. His work is excellent, actually. But in concentric circles outward from him, it gets loopier and ends up in Jordan Peterson territory, or worse. Not my bag. But the people I follow who are postrats are impressive and sincere. On AGI, they tend to be worried about it killing us but also a little sceptical of the technology because it’s not rooted in the embodied, biological intelligence that we conscious humans are able to meditate upon.

If you’re not familiar with any of this, these ideas may sound outlandish, even scandalous. Maybe that suits the subject matter: AI is extraordinary and will presumably become more so.

Quick interlude with some examples

For those wanting to see some of these views in the wild, here are some links to tweets. Otherwise just skip down to the next section.

Here’s an exchange between Geoffrey Miller and Hell’s-easier Yudkowsky, who doesn’t think much of a typical human life but who, to be fair, has thought about the topic. I think it’s interesting that Miller, who’s a very good evolutionary psychologist and, relatedly, unlikely to win any awards for woke ally of the year, is representing Team Humanity against views that would be considered horrifying by the mainstream left.

The thread they’re responding to is by Dave Krueger, a quite sane voice in AI alignment research. Krueger points out how many people working in AI are Team Supplant. Some of the replies include quotes from “Beff Jezos”, the main e/acc guy, so you can see where they’re at.

And here’s a long tweet by Andrew Critch, an AI guy of the TESCREAL persuasion, pointing out how many people in AI want to replace humans. I think his ideas about how to deal with such views are based on a bunch of dubious assumptions but at least he’s acknowledging that these views are out there.

Perhaps all worldviews are scandalous

These new worldviews should not be discredited just because they’re exotic. The average person also has an outrageous worldview.

Our deeds are tallied and, at the end of life, some omniscient auditor sends us to either an eternal torture chamber or a perpetual party.

Noncorporeal versions of dead people subtly interact with the physical world in order to seek revenge or protect their old property.

If one lives according to the right ethics, one’s essence can be transferred at death into a new, higher form, until one escapes the whole circus into endless peace.

Zygotes are implanted with a soul at the moment of conception, thereby granting moral personhood to a single cell.

A cabal of paedophiles run the US government.

Any moment now the Elect will be sucked up to heaven, while we sinners duke it out in a hopeless war of all against all.

You could come up with your own examples. And most people would find my worldview unintuitive, shocking, and fanciful. How could it be otherwise? We view others’ worldviews through the bent prism of our own crackpot worldview.

All serious worldviews are extravagant. They have to be if they purport to capture reality. The stakes of reality are always ultimate. This is why extremism is pregnant within almost any belief system or ideology. It’s especially close to the surface, however, any time someone posits infinite value in the future.2 I can easily imagine someone taking longtermism or the e/acc worldview too literally. They would reason, coherently, that potentially infinite ends in the future justify any means in the present. These would include terrorist attacks, holy wars, imprisonments, even genocide.

Hey, someone should come up with a worldview that sort of takes into account the presence of other worldviews and that maybe takes its own claims to understand the whole world with a grain of chill pill.

These new worldviews seem illiberal

I don’t feel an affinity with any of them. Something is missing. The only group that would seem to value human interests and sovereignty are the postrats. But I’m left cold by their focus on conscious experience as the centre of moral gravity. It’s the problem I have with Buddhism and adjacent ideas: the world as illusion or maya; the only reality within the moment and the breath. This aloofness from the world strikes me as untenable in a scientific age. The whole world is in flux and we are an increasingly significant part of the physical transformations that happen beyond our minds. That includes our despoliation of the Earth but also our vaccines, our music, our cities. Perhaps what we do is, in the final analysis, more important than how we feel.3

The other groups are too eager for AGI to supplant us. This is where our indignation should kick in. A glance at the history of supplantings suggests it’s rarely painless for the supplantee and never voluntary. TESCREAL types speak of the day when we merge with technology via mind uploading or by becoming cyborgs. A “graceful exit” would see AGI take over the shop while we retire to the scenario from the “San Junipero” episode of Black Mirror. The e/acc hacks, meanwhile, want us to die with valour, building the technological pyramids of an advanced civilisation for our AGI pharaoh to inherit.

To resist this, it’s not that you have to be an old-school humanist who says there’s some indomitable spark in the human breast or whatever. You just have to be a basic, no-frills liberal of any kind. The outrage should come from the presumption that people will have no say over whether or not they’re replaced. In the futures imagined by speculators on blogs and X-Twitter, it’s not as if the replacement is put to a referendum. It is assumed we would simply make way, possibly uploading our consciousness to the cloud, while the AGI pinky promises they won’t switch off the server.

The missing quadrant

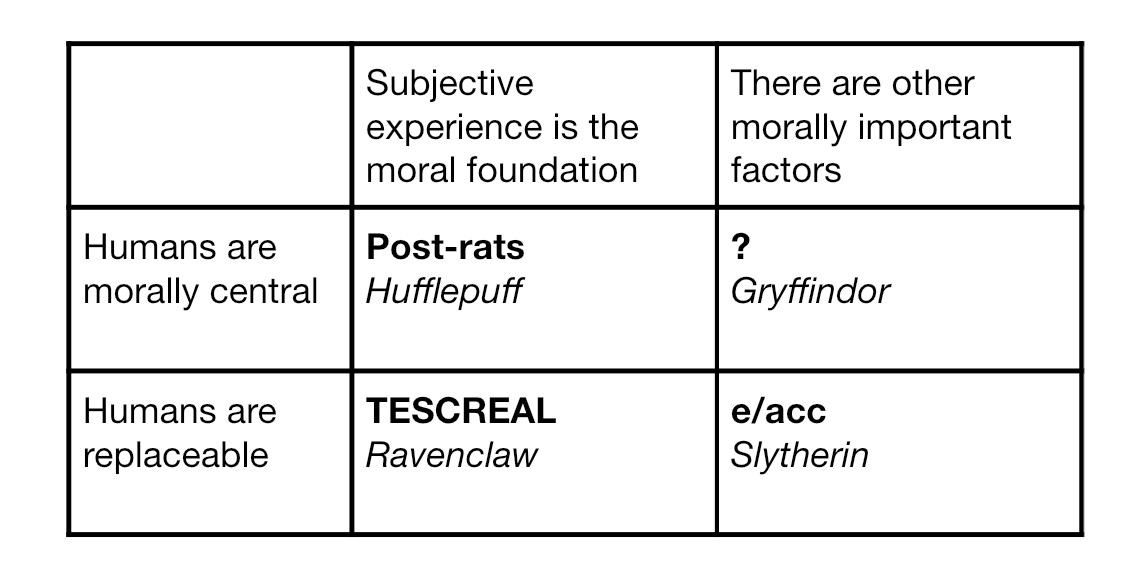

Trying to put my finger on how these worldviews relate and what’s missing, I derived this little 2x2 table complete with a lame Harry Potter framework.

The top right quadrant needs filling. It awaits a champion of the human; not humanism, which has been fed on from all sides, but something that prioritises self-determination and pluralism.

Many groups and people do so. But such advocacy seems to coincide with a luddite mentality, or with an understandable reluctance to get into the speculative thought required for arguing over what kinds of cyborgs should be allowed to vote. I’d be more encouraged if there were more liberalism (vaguely defined) in this discourse. We should oppose the logic of totality embodied in the anti-humanist views of rationalists, who would have us abort lives that fail to meet some threshold of hedonic minimum wage, and in the breezy call for human deracination offered by e/acc goons, who want to donate our carbon to build Dyson spheres.

1. They do also want to eliminate suffering: the negative utilitarian credo. That’s why effective altruism began with efforts to donate to charities that were most competent at preventing disease and other unimpeachable causes. Some years ago now, they pivoted to longtermism because of the arithmetic involved in valuing future lives. Even if you have a discount rate (value future lives as less important than the current generation) most estimates assume that there could be orders of magnitude more humans yet to be born so it overpowers any weighting towards the present. The inescapable conclusion is that every scintilla of our energy now should be spent shoring up the long-term future because that’s where all the ethical gains can be made.
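The arithmetic in that footnote can be sketched with a toy calculation. Everything here is an assumption made for illustration and not a figure from any actual longtermist estimate: the function name `discounted_future_weight`, the 10,000-generation horizon, the equal-sized generations, and the 1% per-generation discount are all hypothetical.

```python
def discounted_future_weight(generations: int, discount: float) -> float:
    """Total moral weight of all future generations, measured in units of
    one present generation.

    Assumes (hypothetically) that every generation is the same size and
    that generation k is valued at (1 - discount) ** k of the present one.
    """
    keep = 1.0 - discount
    return sum(keep ** k for k in range(1, generations + 1))


# Hypothetical inputs: 10,000 future generations, each valued 1% less
# than the generation before it.
future = discounted_future_weight(generations=10_000, discount=0.01)
present = 1.0  # the current generation counts at full weight
ratio = future / present

print(f"future generations outweigh the present by roughly {ratio:.0f}x")
```

Under these made-up numbers the discounted future still counts for far more than the present generation. The result depends heavily on the assumed rate and horizon, so treat it as an illustration of the shape of the argument, not as evidence for it.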

2. This is one major motivation behind my sceptical worldview and why it sustains pluralism. I don’t know if my or any other worldview is right; therefore I need a portfolio of other people who disagree with me and I’m thankful they do. I don’t want a world where everyone thinks I’m right. From what I’ve seen, most people want their worldview to triumph or be accepted by all. They certainly don’t want their own worldview to change.

3. Big claim. Can’t unpack here. Will do so in my book.

What is your main problem with the "luddite mentality"? Perhaps this is something to address in a future post...

This conflict about doing vs. being you bring up is pretty central to a great essay by Arthur Koestler called The Yogi and the Commissar. You seem to be leaning very hard into the direction of the commissar, but I think the real ideal is the Jedi Knight, the union of the Yogi and the Commissar, a zen mind and yet very capable of acting upon the world.

Perhaps that is the Gryffindor quadrant?